In this tutorial, we are going to build inference software for a CNN model.

The software loads data/VGG16.onnx, takes an input image, and outputs the classification result.

We start with simple hello-world code:

Preprocessing input

First of all, the input has to be preprocessed. data/VGG16.onnx takes a 3-channel 224 x 224 image, but an input image is not always 224 x 224. So we use the resize() function in OpenCV:
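To make the resizing step concrete without depending on OpenCV, here is a minimal nearest-neighbor sketch. It is only a stand-in for illustration: `resize_nearest` is a hypothetical helper, not part of OpenCV or Menoh, and OpenCV's resize() uses bilinear interpolation by default, so its output will differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: nearest-neighbor resize of an interleaved (HWC) image.
// Each destination pixel copies the source pixel at the proportionally
// scaled coordinate.
std::vector<float> resize_nearest(std::vector<float> const& src,
                                  int src_h, int src_w, int channels,
                                  int dst_h, int dst_w) {
    assert(src_h > 0 && src_w > 0 && channels > 0 && dst_h > 0 && dst_w > 0);
    assert(src.size() == static_cast<std::size_t>(src_h) * src_w * channels);
    std::vector<float> dst(static_cast<std::size_t>(dst_h) * dst_w * channels);
    for(int y = 0; y < dst_h; ++y) {
        int const sy = y * src_h / dst_h; // source row for destination row y
        for(int x = 0; x < dst_w; ++x) {
            int const sx = x * src_w / dst_w; // source column for column x
            for(int c = 0; c < channels; ++c) {
                dst[(static_cast<std::size_t>(y) * dst_w + x) * channels + c] =
                  src[(static_cast<std::size_t>(sy) * src_w + sx) * channels + c];
            }
        }
    }
    return dst;
}
```

For VGG16 the call would be `resize_nearest(image, h, w, 3, 224, 224)`, where `image`, `h` and `w` describe the decoded input.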

VGG16 assumes that the mean pixel values of the images in ImageNet have been subtracted from the input image.
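The subtraction can be sketched as follows on a plain float buffer. The per-channel values are the BGR means commonly used with Caffe-derived VGG models; that data/VGG16.onnx expects exactly these values is an assumption, and `kImagenetBgrMean` and `subtract_channel_mean` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: BGR channel means commonly used with Caffe-derived VGG models.
constexpr std::array<float, 3> kImagenetBgrMean = {103.939f, 116.779f, 123.68f};

// Hypothetical helper: subtract a per-channel mean from an interleaved
// 3-channel (HWC) image in place.
void subtract_channel_mean(std::vector<float>& hwc,
                           std::array<float, 3> const& mean) {
    assert(hwc.size() % 3 == 0); // whole pixels only
    for(std::size_t i = 0; i < hwc.size(); ++i) {
        hwc[i] -= mean[i % 3]; // i % 3 is the channel index in HWC order
    }
}
```

Because the buffer is still in HWC order here, the channel of element `i` is simply `i % 3`; the subtraction therefore has to happen before the data is reordered to CHW.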

Menoh takes images in NCHW format (N x Channels x Height x Width), but OpenCV's Mat holds an image in HWC format (Height x Width x Channels).

So next we define reorder_to_chw.
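A minimal sketch of such a reordering is shown here. It operates on a plain `std::vector<float>` rather than a cv::Mat so that it stays self-contained; the signature is an assumption made for illustration and may differ from the one in the example source.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sketch: convert an interleaved HWC buffer into a planar CHW buffer.
std::vector<float> reorder_to_chw(std::vector<float> const& hwc,
                                  int height, int width, int channels) {
    assert(height > 0 && width > 0 && channels > 0);
    assert(hwc.size() == static_cast<std::size_t>(height) * width * channels);
    std::vector<float> chw(hwc.size());
    for(int c = 0; c < channels; ++c) {
        for(int y = 0; y < height; ++y) {
            for(int x = 0; x < width; ++x) {
                // HWC index: (y * W + x) * C + c; CHW index: (c * H + y) * W + x
                chw[(static_cast<std::size_t>(c) * height + y) * width + x] =
                  hwc[(static_cast<std::size_t>(y) * width + x) * channels + c];
            }
        }
    }
    return chw;
}
```

With a batch size of 1, the resulting CHW buffer is already laid out as NCHW.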

The main preprocessing code is:

Setup model

An ONNX model has named variables. To build a model, we have to specify the names of its input and output variables.

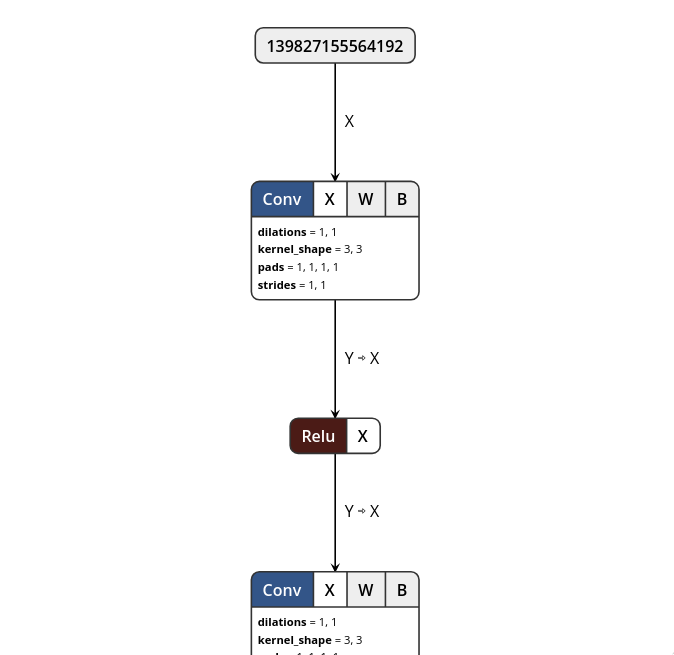

We can checks them with Netron:

Then you can see the content by accessing localhost:8080 with browser like below.

VGG16 has one input and one output. From the graph we can see that the input name is 140326425860192 (input of 0:Conv) and the output name is 140326200803680 (output of 39:Softmax).

Some of us are interested in the feature vector of the input image. So in addition, we are going to take the output of 32:FC (fc6, the first FC layer after the convolutional layers), named 140326200777584.

We define name aliases for convenience:
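The aliases might look like the following sketch. `softmax_out_name` is the name used later in this tutorial; `conv1_1_in_name` and `fc6_out_name` are assumed names chosen for illustration. The numeric strings are the variable names read from Netron above.

```cpp
#include <string>

// Aliases for the ONNX variable names shown by Netron.
// conv1_1_in_name and fc6_out_name are assumed names for illustration.
std::string const conv1_1_in_name = "140326425860192";  // input of 0:Conv
std::string const fc6_out_name = "140326200777584";     // output of 32:FC
std::string const softmax_out_name = "140326200803680"; // output of 39:Softmax
```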

We load the model data from the ONNX file:

To build the model, we have to build a variable_profile_table.

To build the variable_profile_table, we make a variable_profile_table_builder.

We add information about the input and output variables.

Then we build the variable_profile_table.

The variable_profile_table holds the dimensions of the output variables, calculated from the dimensions of the input variable. So we can get the output dims from the variable_profile_table.

Let's prepare the fc6 data buffer.
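Sizing such a buffer comes down to multiplying the dims together. The sketch below shows this with the standard library only; `total_size` and `make_buffer` are hypothetical helpers, and the dims passed to them would be the ones obtained from the variable_profile_table. For a batch of one image, the fc6 dims are assumed to be {1, 4096}.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <vector>

// Hypothetical helper: number of elements described by a dims vector.
std::size_t total_size(std::vector<std::int32_t> const& dims) {
    return std::accumulate(
      dims.begin(), dims.end(), std::size_t{1},
      [](std::size_t acc, std::int32_t d) {
          assert(d > 0); // every dimension must be positive
          return acc * static_cast<std::size_t>(d);
      });
}

// Hypothetical helper: zero-initialized float buffer large enough for dims.
std::vector<float> make_buffer(std::vector<std::int32_t> const& dims) {
    return std::vector<float>(total_size(dims));
}
```

The buffer must outlive the model it is attached to, so it is usually declared in the same scope as the model.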

Now we can make the model_builder.

By attaching a buffer, we can specify which data buffer is used for a target variable.

No buffer is attached to the softmax_out_name variable here. Don't worry.

An internal buffer is attached to the softmax_out_name variable automatically.

And we can get a handle to that buffer later.

Let's build the model.

Run inference and get result

Now we can run inference.

We can get an output variable by calling get_variable.
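Once the softmax output is available as a float buffer, the classification result is the set of indices with the highest scores. The following is a minimal sketch of that step using only the standard library; `top_k_indices` is a hypothetical helper, and how the pointer and size are obtained from the output variable is left out here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper: indices of the k largest scores, best first.
std::vector<std::size_t> top_k_indices(float const* scores, std::size_t size,
                                       std::size_t k) {
    assert(scores != nullptr || size == 0);
    k = std::min(k, size);
    std::vector<std::size_t> indices(size);
    std::iota(indices.begin(), indices.end(), std::size_t{0});
    // Only the first k positions need to be sorted by descending score.
    std::partial_sort(
      indices.begin(), indices.begin() + static_cast<std::ptrdiff_t>(k),
      indices.end(),
      [scores](std::size_t a, std::size_t b) { return scores[a] > scores[b]; });
    indices.resize(k);
    return indices;
}
```

Each returned index can then be mapped to a category name through whatever label list accompanies the model.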

That's it.

You can see the full code in example/vgg16_example_in_cpp.cpp.
