Abstract

TL;DR: Neural radiance fields (NeRFs) can be edited by decomposing them with arbitrary queries on feature fields distilled from pre-trained vision models.

Results

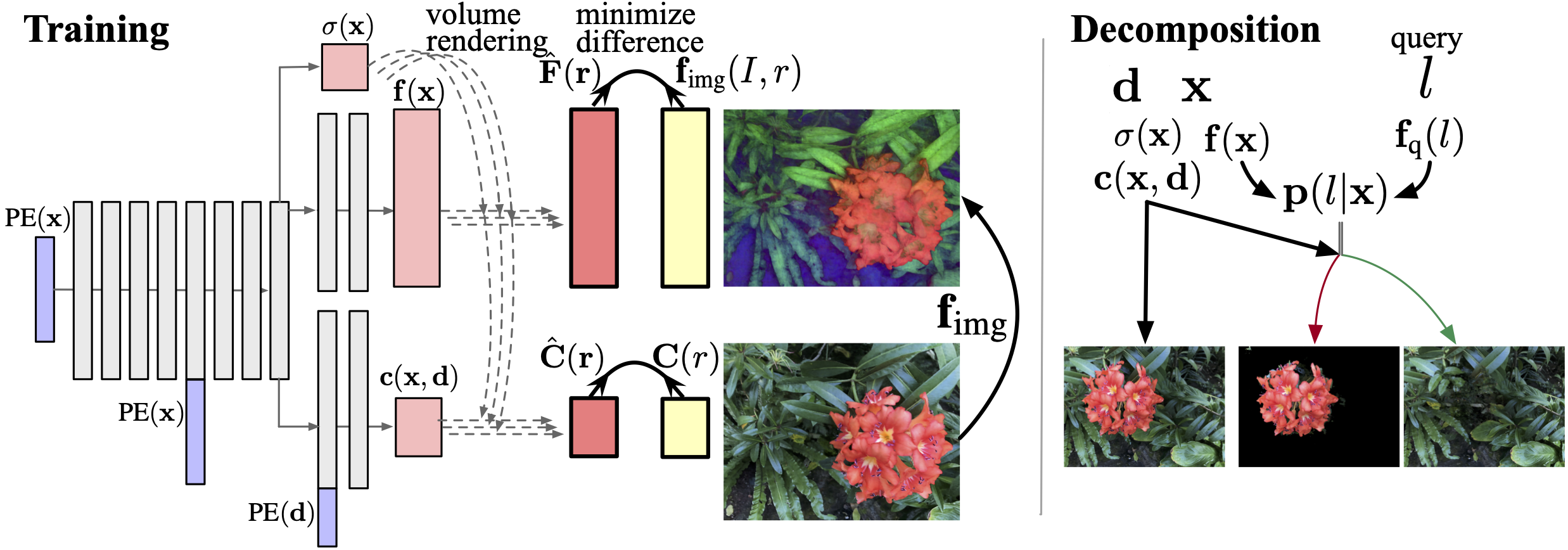

Our distilled feature fields (DFFs) are trained by distilling 2D vision encoders, such as LSeg (a CLIP-based zero-shot segmentation model) or DINO (a self-supervised model that performs well on part-correspondence tasks), without any 3D or 2D annotations. In addition to the radiance field, the learned feature field maps every 3D coordinate to a semantic feature descriptor of that coordinate. We can segment the 3D space, and the corresponding radiance field, by choosing a query feature vector and scoring each point by the dot product between its feature and the query.
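As a rough illustration of the dot-product scoring described above, the sketch below scores per-point features against a single query vector and thresholds the result. It assumes the features have already been read out of the distilled feature field as an (N, D) array; the toy feature values, the query, and the threshold of 0.5 are hypothetical, not values from this work.

```python
import numpy as np

def query_scores(features: np.ndarray, query: np.ndarray) -> np.ndarray:
    """Dot-product score between each point's feature (N, D) and a query (D,)."""
    return features @ query

def segment(features: np.ndarray, query: np.ndarray, threshold: float) -> np.ndarray:
    """Boolean mask of points whose score exceeds a (hypothetical) threshold."""
    return query_scores(features, query) > threshold

# Toy 4-D features for three 3D points (values invented for illustration).
features = np.array([
    [0.9, 0.1, 0.0, 0.0],  # point resembling the query
    [0.1, 0.8, 0.1, 0.0],
    [0.0, 0.0, 0.2, 0.9],
])
query = np.array([1.0, 0.0, 0.0, 0.0])  # stand-in for an encoded text or patch query

mask = segment(features, query, threshold=0.5)
print(mask.tolist())  # [True, False, False]
```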

Query-based Scene Decomposition

Distilled feature fields enable NeRF to isolate a specific object given a text query, such as "flower", or an image-patch query.
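With several text queries, one simple option (an assumption here, not necessarily the exact rule used in this work) is to compare each point against every label embedding and keep the points whose best-matching label is the target. In the sketch, `np.eye(3)` stands in for text-encoder embeddings of the hypothetical labels "flower", "leaf", and "other". An image-patch query could be plugged in the same way, for example as the mean teacher feature inside the selected patch.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def decompose(features: np.ndarray, label_embeddings: np.ndarray, target: int) -> np.ndarray:
    """Mask of points (N,) whose most probable label is `target`."""
    probs = softmax(features @ label_embeddings.T, axis=-1)  # (N, L) per-label probabilities
    return probs.argmax(axis=-1) == target

labels = ["flower", "leaf", "other"]
label_embeddings = np.eye(3)  # hypothetical stand-ins for text embeddings
features = np.array([
    [2.0, 0.5, 0.1],
    [0.2, 1.5, 0.3],
    [0.1, 0.2, 0.4],
    [1.2, 0.9, 0.0],
])

flower_mask = decompose(features, label_embeddings, labels.index("flower"))
print(flower_mask.tolist())  # [True, False, False, True]
```

The softmax does not change which label wins; it only turns the scores into per-label probabilities, which is convenient if a confidence threshold is added later.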

(Figure panels: raw rendering, extraction, deletion.)
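Extraction and deletion can be sketched with standard NeRF volume rendering by editing density before compositing: keep only the density inside the segment to extract an object, or zero it there to delete the object. This is a minimal sketch assuming a boolean per-sample mask from the query step; the two-sample ray, colors, and densities are toy values.

```python
import numpy as np

def render_ray(sigma, rgb, deltas):
    """NeRF quadrature along one ray: sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i."""
    alpha = 1.0 - np.exp(-sigma * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j), with T_0 = 1.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = trans * alpha
    return (weights[:, None] * rgb).sum(axis=0)

def edit_density(sigma, mask, mode):
    """Zero density outside the segment ("extract") or inside it ("delete")."""
    if mode == "extract":
        return np.where(mask, sigma, 0.0)
    if mode == "delete":
        return np.where(mask, 0.0, sigma)
    raise ValueError(f"unknown mode: {mode}")

# Toy ray with two samples: a red object in front of a blue background.
sigma = np.array([5.0, 5.0])
deltas = np.array([1.0, 1.0])
rgb = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
mask = np.array([True, False])  # first sample lies in the queried segment

raw = render_ray(sigma, rgb, deltas)
extracted = render_ray(edit_density(sigma, mask, "extract"), rgb, deltas)
deleted = render_ray(edit_density(sigma, mask, "delete"), rgb, deltas)
```

After deletion the blue background shows through on this toy ray only because it was sampled with nonzero density; in a real scene the region behind an object may never have been observed.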

Localizing CLIPNeRF via Scene Decomposition

CLIPNeRF optimizes a NeRF scene with a text prompt. However, naive CLIPNeRF also alters unintended parts of the scene. By combining our decomposition method with CLIPNeRF, we can selectively optimize only the target object.
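The idea of optimizing only the target object can be sketched as a gradient step masked by the segment. Here a toy squared-error loss toward white stands in for the CLIP-based loss, and per-point colors stand in for the radiance field's parameters; both are simplifying assumptions, not how CLIPNeRF or this method is implemented.

```python
import numpy as np

def masked_step(colors, grad, mask, lr):
    """Gradient-descent step applied only to points inside the target segment."""
    return colors - lr * grad * mask[:, None]

colors = np.array([[0.8, 0.2, 0.6],   # point on the flower (in the segment)
                   [0.1, 0.6, 0.1]])  # point on a leaf (outside the segment)
mask = np.array([True, False])
target = np.array([1.0, 1.0, 1.0])    # toy stand-in for the prompt "white flower"

for _ in range(100):
    grad = 2.0 * (colors - target)    # gradient of the toy loss ||c - target||^2
    colors = masked_step(colors, grad, mask, lr=0.1)
```

Each step shrinks the flower point's error by a factor of 0.8, while the leaf point receives a zero update and keeps its original color.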

(Figure panels: naive CLIPNeRF vs. + Our method, for the text prompts "white flower", "yellow flower", "sunflower", "rainbow flower", and "petunia".)

Other Edits

We can edit decomposed objects in NeRF scenes in various ways: for example, rotating, translating, or scaling them, warping them into another scene, colorizing them, or deleting them.
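Geometric edits such as moving, rotating, or scaling an object can be sketched by inverse-mapping each query point into the original scene: the background keeps its density outside the segment, and the object's density is read at the inverse-transformed location. The ball-shaped `segment_fn` (in practice this would be a thresholded feature score), the toy `density_fn` with an "apple" and a "floor", and the transform are all hypothetical.

```python
import numpy as np

def rotation_z(theta):
    """3x3 rotation about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def edited_density(x, density_fn, segment_fn, R, t, scale):
    """Density at points x (N, 3) after moving the segment by x' = scale * R p + t."""
    # Inverse map: p = R^T (x - t) / scale, written for row vectors.
    p = (x - t) @ R / scale
    background = np.where(segment_fn(x), 0.0, density_fn(x))  # object removed in place
    moved = np.where(segment_fn(p), density_fn(p), 0.0)       # object read at its source
    return background + moved

def density_fn(x):
    """Toy scene: an 'apple' (unit ball) and a 'floor' below z = -2."""
    apple = np.where(np.linalg.norm(x, axis=-1) < 1.0, 10.0, 0.0)
    floor = np.where(x[:, 2] < -2.0, 5.0, 0.0)
    return apple + floor

def segment_fn(x):
    """Hypothetical segment: points inside the unit ball."""
    return np.linalg.norm(x, axis=-1) < 1.0

points = np.array([[3.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, -3.0]])
dens = edited_density(points, density_fn, segment_fn,
                      R=rotation_z(np.pi / 2), t=np.array([3.0, 0.0, 0.0]), scale=1.0)
```

Color can be composited the same way, by reading the object's color at the inverse-mapped point. The object's original location ends up with zero density, which is why unobserved background can appear there as a hole.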

(Figure panels: move and deform apple (LSeg field); warp horns (DINO field).)

(Figure panels, colorize: light, chair, television, floor.)

(Figure panels, delete: light, chair, television, floor.)

(Note that the background behind deleted objects can be noisy or contain holes, because it was not observed in the input views.)

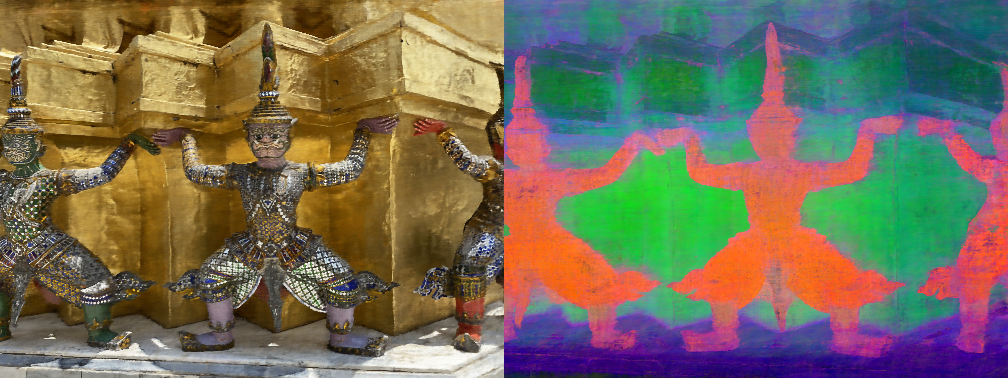

LSeg Feature Field

We visualize, using PCA, a feature field distilled from LSeg as the teacher network. The flower scene is from LLFF.
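A common way to produce this kind of visualization, sketched here as an assumption about the procedure, is to project each pixel's rendered D-dimensional feature onto the top three principal components and rescale each component to [0, 1] as an RGB channel. Random numbers stand in for a rendered feature map.

```python
import numpy as np

def pca_rgb(features: np.ndarray) -> np.ndarray:
    """Project (H, W, D) features onto the top-3 principal components, scaled to [0, 1]."""
    h, w, d = features.shape
    flat = features.reshape(-1, d)
    centered = flat - flat.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[:3].T                      # (H*W, 3)
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    rgb = (proj - lo) / np.maximum(hi - lo, 1e-8)   # per-channel min-max scaling
    return rgb.reshape(h, w, 3)

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(32, 32, 64))  # stand-in for rendered distilled features
image = pca_rgb(feature_map)
```

Min-max scaling per component keeps each channel's full range visible; a fixed projection computed on one view could instead be reused across views to keep colors consistent.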

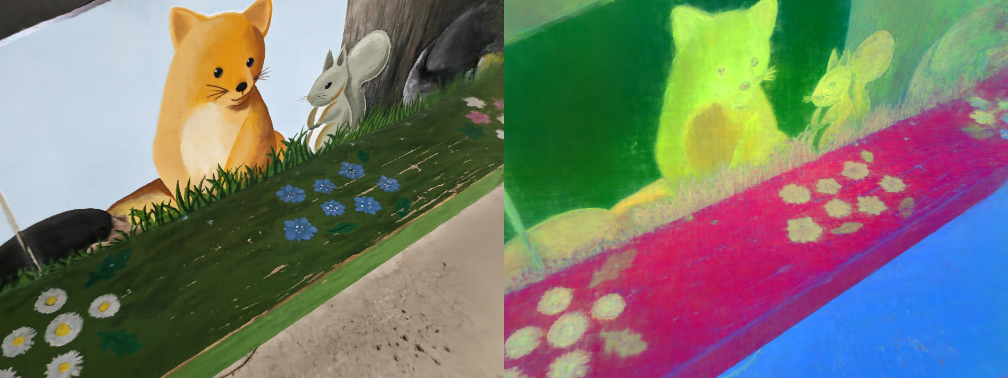

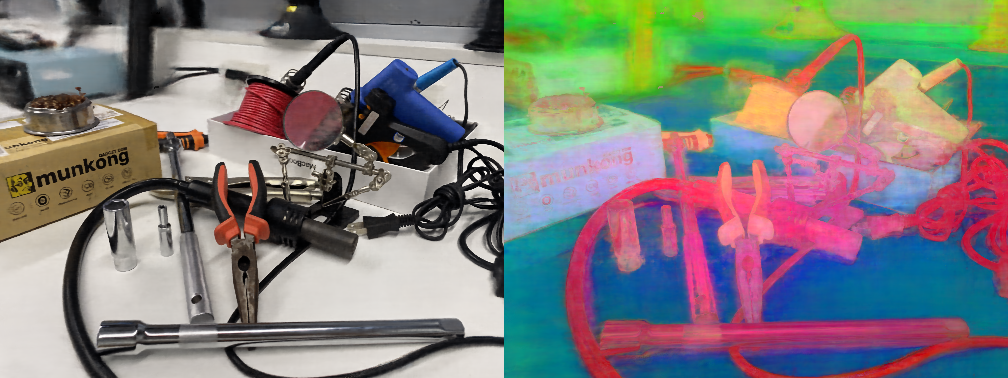

Other DINO Feature Fields

We visualize, using PCA, feature fields distilled from DINO as the teacher network. The scenes are from LLFF, the shiny dataset, and our own dataset.